Testing out OpenSfM for point cloud generation

Recently I’ve been looking into Structure from Motion (SfM) techniques quite a bit, as I’m planning a larger mapping project utilising SfM (previously I crafted a data gathering unit for the project). I’m quite sure that in the end I will need to create custom SfM solutions, since the existing open source SfM implementations are not quite optimal for my use case. My mapping scenario will utilise relatively high-FPS video, as well as sensor fusion with an IMU. Most SfM libraries seem to be designed for scenarios where a single object of interest is captured with a handful of images from a wide variety of angles. However, before jumping into creating my own SfM solutions, I figured it would be appropriate to experiment with existing solutions first. I settled on OpenSfM, since it is seemingly the most established open source SfM library available.

OpenSfM usage

Using the OpenSfM library is really simple. You just need to create an empty project directory and, inside it, a sub-directory named images. Place your images in this sub-directory. To carry out the full processing pipeline, execute the following command from your OpenSfM installation’s root directory:

`bin/opensfm_run_all <path-to-project-directory>`

If you want to change the default configuration of the pipeline, create a config.yaml file in your project directory and edit it before running the processing. In my tests, I set the depthmap_resolution parameter to 1280 in order to obtain higher-resolution point clouds.
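The project setup and configuration steps can also be scripted. Below is a minimal Python sketch, assuming only the layout described above (an images sub-directory plus an optional config.yaml in the project directory). The helper name prepare_opensfm_project is hypothetical and not part of OpenSfM. Since the override file here holds a single key, it is written as plain text to avoid a YAML library dependency.

```python
from pathlib import Path
import shutil


def prepare_opensfm_project(project_dir, image_paths, depthmap_resolution=1280):
    """Hypothetical helper (not an OpenSfM API): build <project>/images/ and config.yaml."""
    project = Path(project_dir)
    images_dir = project / "images"
    # Create the project directory and its images/ sub-directory in one go
    images_dir.mkdir(parents=True, exist_ok=True)
    for src in image_paths:
        # Copy each photo into images/, keeping its original file name
        shutil.copy2(src, images_dir / Path(src).name)
    # Write only the overridden key; OpenSfM is expected to keep defaults for the rest
    (project / "config.yaml").write_text(f"depthmap_resolution: {depthmap_resolution}\n")
    return project
```

After running it, the pipeline is started as before with bin/opensfm_run_all pointed at the new project directory.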

Tests and results

I tested OpenSfM in four different scenarios, with a focus on outdoor scenery. Each test consisted of 10-20 images captured with my mobile phone, at a resolution of 4160x3120 pixels.

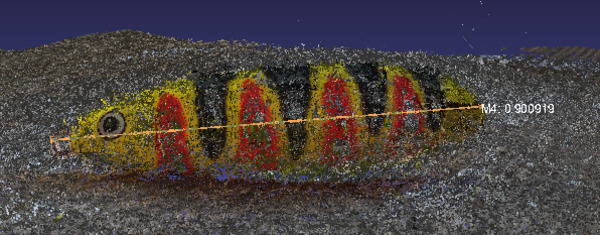

I started with a basic test in a plain, controlled environment: one of my fishing lures placed on the floor. The lure has distinct, colourful patterns, making it a fairly ideal target for photogrammetry. The images below show samples of the captured photos, as well as the resulting point cloud. The result seems quite convincing, with the point cloud representing the lure fairly accurately. However, the inner part of the lure, which has no pattern, was clearly captured less well.

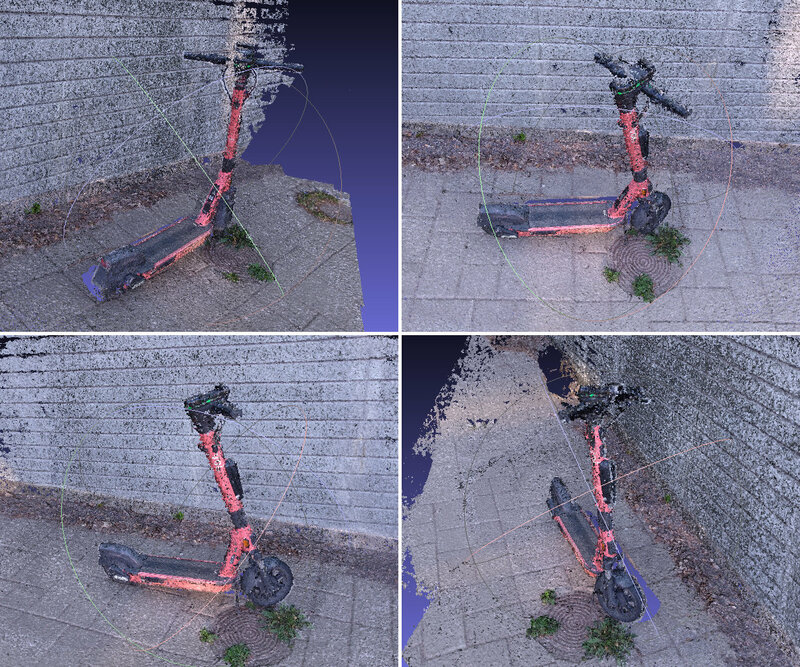

The subject of the second test was an electric scooter against a plain background. The resulting point cloud was again quite accurate. Samples of the photos and the point cloud are provided below.

The next test introduced a spike in difficulty, with the target being an excavator at a construction site. Most images featured large portions of background. The background contained a plethora of varying detail, yet few dependable landmarks were visible throughout the images. The excavator itself featured multiple parts with a uniform appearance and little detail. These factors probably introduced errors in the feature matching. As the result below shows, the point cloud generation did not succeed as well as in the previous tests. There is considerably more noise in the point cloud, making it difficult to perceive the shape of the excavator.
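To illustrate why uniform or repetitive regions hurt feature matching, here is a minimal sketch of Lowe’s ratio test, a common filter for ambiguous descriptor matches. I believe OpenSfM exposes a lowes_ratio option in its configuration, but this brute-force NumPy version is my own simplified illustration, not OpenSfM’s implementation; the function name and the toy 2-D descriptors are assumptions.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to desc_b, keeping only unambiguous matches."""
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    if len(desc_b) < 2:
        raise ValueError("desc_b needs at least two descriptors for a ratio test")
    matches = []
    for i, d in enumerate(desc_a):
        # Euclidean distance from descriptor i to every descriptor in the other image
        dists = np.linalg.norm(desc_b - d, axis=1)
        nearest, second = np.argsort(dists)[:2]
        # Accept only if the best candidate is clearly closer than the runner-up
        if dists[nearest] < ratio * dists[second]:
            matches.append((i, int(nearest)))
    return matches
```

When a descriptor’s two best candidates are almost equally close, as happens on plain surfaces or repeated structures, the match is discarded; ambiguous matches that slip through such a filter become wrong correspondences and, ultimately, noise.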

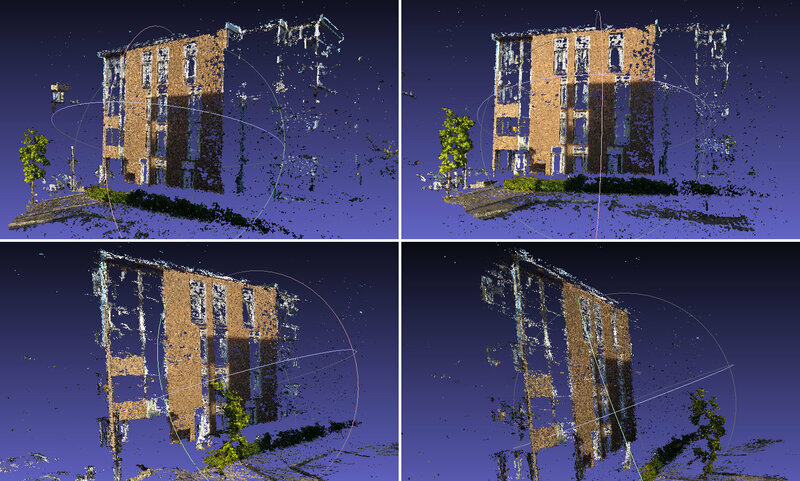

The last test captured the facade of an apartment building. Since the lighter parts of the facade appeared remarkably plain in the images, these parts were poorly captured in the point cloud. The stereo matching was most likely unable to find reliable correspondences in these textureless regions. The results are again presented below.
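Patch-based dense stereo typically scores a candidate depth by comparing small image patches, for example with normalized cross-correlation (NCC). Assuming an NCC-style score (I have not checked exactly which score OpenSfM’s depthmap step uses), the minimal NumPy sketch below shows the problem with plain regions: a patch with zero intensity variance has no defined score, so it carries no information about depth. The function and its eps threshold are illustrative assumptions.

```python
import numpy as np


def ncc(patch_a, patch_b, eps=1e-6):
    """Normalized cross-correlation of two equally sized grey-scale patches.

    Returns None when either patch has (near) zero variance, i.e. is textureless,
    because the score is then undefined.
    """
    a = np.asarray(patch_a, dtype=float).ravel()
    b = np.asarray(patch_b, dtype=float).ravel()
    if a.shape != b.shape:
        raise ValueError("patches must have the same size")
    # Subtract the means so a uniform brightness offset does not affect the score
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    if denom < eps:
        return None
    return float((a * b).sum() / denom)
```

In practice a plain wall is not perfectly uniform; its small intensity variations come mostly from sensor noise, so the scores become unreliable rather than strictly undefined.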

Discussion

The resulting point clouds contained varying amounts of noise, such as a “fog” of incorrectly placed outlier points throughout the point cloud, as well as “holes” in the object shapes. The quality of the point clouds could be notably improved by applying basic filtering and denoising techniques. In particular, the individual outlier points scattered around the point cloud should be quite easy to remove.
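One such basic technique is statistical outlier removal: compute each point’s mean distance to its k nearest neighbours and discard points whose value lies far above the average. Below is a minimal brute-force NumPy sketch, assuming the point cloud has already been loaded into an (N, 3) array (loading is not shown). The parameters k and std_ratio are illustrative defaults; libraries such as Open3D provide a ready-made equivalent (PointCloud.remove_statistical_outlier). This targets only the outlier “fog”, not the holes.

```python
import numpy as np


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    points: (N, 3) array with N > k. Uses an O(N^2) distance matrix, so small clouds only.
    Returns the filtered points and the boolean keep-mask.
    """
    pts = np.asarray(points, dtype=float)
    if len(pts) <= k:
        raise ValueError("need more than k points")
    # Pairwise Euclidean distances between all points
    diff = pts[:, None, :] - pts[None, :, :]
    dists = np.sqrt((diff ** 2).sum(axis=-1))
    # Sort each row; column 0 is each point's zero distance to itself, so skip it
    knn = np.sort(dists, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    # Keep points within std_ratio standard deviations above the global mean
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return pts[keep], keep
```

The quadratic distance matrix limits this version to small clouds; for real point clouds, a k-d tree (for example scipy.spatial.cKDTree) keeps the neighbour queries fast.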

As a final note, it should be pointed out that the scale of the presented point clouds is arbitrary: their dimensions are not in any meaningful real-world units. This is an inherent limitation of monocular SfM, which cannot determine the absolute scale of the scene without additional information, such as a known reference distance or IMU/GPS data. The effect is evident when measuring the presented point clouds: in the real world, the analysed lure is approximately 5 cm long, but in the point cloud it measures 0.9 units. This is highlighted in the image below.
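Given one known reference length, the scale ambiguity can be resolved with a uniform scale factor s = real length / model length. For the lure, s = 5 cm / 0.9 units ≈ 5.56 cm per unit. The minimal Python sketch below applies this, assuming the reference measurement is accurate; the function names are hypothetical.

```python
import numpy as np


def metric_scale_factor(real_length, model_length):
    """Real-world units per point-cloud unit, from one known reference length."""
    if model_length <= 0:
        raise ValueError("model_length must be positive")
    return real_length / model_length


def rescale_points(points, factor):
    """Apply a uniform scale (about the origin) to an (N, 3) point array."""
    return np.asarray(points, dtype=float) * factor


# Lure example from the text: ~5 cm in reality, 0.9 units in the point cloud
factor = metric_scale_factor(5.0, 0.9)  # ≈ 5.56 cm per unit
```

Any error in the 0.9-unit measurement carries directly into every rescaled distance, so several reference lengths, or metric sensor data such as the IMU mentioned earlier, would give a more robust scale.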