Testing Out IMX219 Stereovision Camera with Jetson Nano

I’ve always been a sucker for using CSI cameras with SBCs; they are my go-to solution when experimenting with a Raspberry Pi or Jetson Nano. In my experience these cameras work flawlessly and offer impressive image quality (as well as a plethora of settings for adjusting the image acquisition). So it only makes sense that I would test out a stereovision module consisting of two CSI cameras.

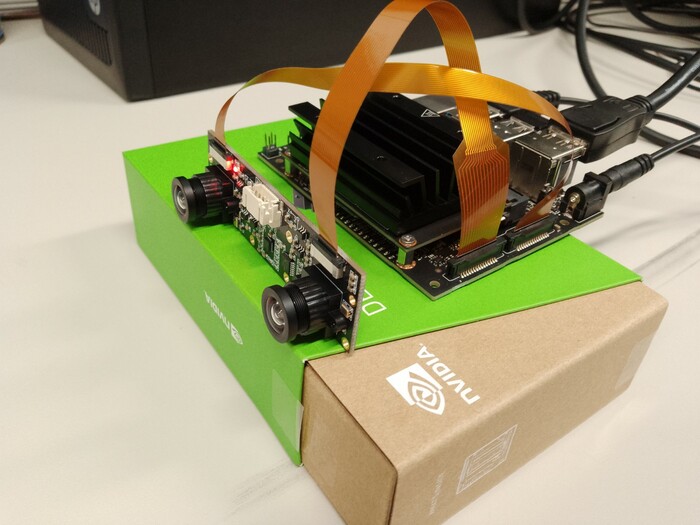

Hardware Setup

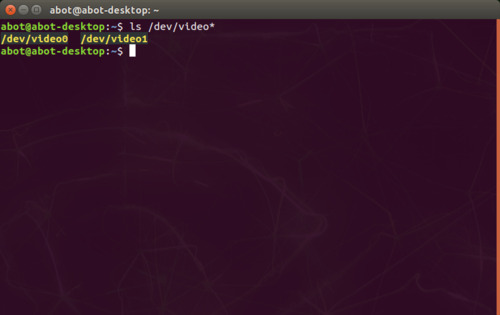

I selected the IMX219 stereo module for its low price and wide-angle lenses. The IMX219 camera sensor is the same one used in the Raspberry Pi Camera v2, so the module is essentially two of these sensors rigidly attached together and fitted with wide-angle lenses. For indoor applications, I often feel that regular lenses, with an FOV of approximately 90 degrees, are too narrow. The module also has an on-board IMU, which can be accessed via I2C. For now, however, my focus was on the cameras themselves.

The module seemed straightforward to connect to the Nano: two flex cables run from the cameras to the respective ports on the Nano. Or so I thought. After wondering for a while why I wasn’t seeing any video inputs in /dev/, I swapped the cables between the ports. Apparently the cable coming from the camera with the red LED had to be connected to the CAM0 port on the Nano. Afterwards, the video inputs appeared as expected.
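A quick way to repeat the check for video inputs is a few lines of Python. This is only a minimal sketch: the helper name `list_video_devices` is my own, and I am assuming the cameras show up as `/dev/video*` nodes, so with both cables seated correctly I would expect two entries.

```python
import glob


def list_video_devices(pattern="/dev/video*"):
    """Return the sorted device nodes that match the given glob pattern."""
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    devices = list_video_devices()
    if devices:
        print("Found video inputs:", ", ".join(devices))
    else:
        # An empty list is what I saw before swapping the flex cables.
        print("No video inputs found; check the flex cable connections.")
```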

Software for Testing

After testing a couple of different methods for acquiring frames from the cameras, I finally found an appropriate GStreamer command for checking the camera feeds.

gst-launch-1.0 nvarguscamerasrc sensor_id=0 ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! nvvidconv flip-method=2 ! 'video/x-raw, width=816, height=616' ! nvvidconv ! nvegltransform ! nveglglessink -e

Changing the sensor_id parameter (0 or 1) allowed me to switch between the two cameras.

Initially the pictures taken with the cameras had a weird red glow on the edges. This was fixed by setting some overrides provided by the manufacturer.

wget https://www.waveshare.com/w/upload/e/eb/Camera_overrides.tar.gz

tar zxvf Camera_overrides.tar.gz

sudo cp camera_overrides.isp /var/nvidia/nvcam/settings/

sudo chmod 664 /var/nvidia/nvcam/settings/camera_overrides.isp

sudo chown root:root /var/nvidia/nvcam/settings/camera_overrides.isp

Future Development

Next steps with the module involve creating OpenCV Python functions for reading the cameras, performing stereo calibration, and extracting depth maps in real time (>30 fps). The process should be quite straightforward, as GStreamer-based OpenCV functions for reading CSI cameras are available. OpenCV also offers great tools for stereo calibration and for computing depth from disparity, so this shouldn’t be a problem either. The ultimate goal is to create a ROS node capable of providing the individual camera views as well as the depth map.
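As a starting point for the camera-reading part, the test command from earlier could be turned into a pipeline string that OpenCV can consume. The sketch below is untested on the module: the function name `gstreamer_pipeline` and its defaults are my own choices (the defaults mirror the gst-launch command above), and the `BGRx`/`videoconvert`/`appsink` tail is the commonly used pattern for handing frames to OpenCV rather than something I have verified on this setup.

```python
def gstreamer_pipeline(
    sensor_id=0,
    capture_width=3280,
    capture_height=2464,
    framerate=21,
    flip_method=2,
    output_width=816,
    output_height=616,
):
    """Build a GStreamer pipeline description for one CSI camera.

    The pipeline ends in appsink so that an application (for example OpenCV)
    can pull BGR frames from it instead of rendering them to the screen.
    """
    return (
        f"nvarguscamerasrc sensor_id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={capture_width}, height={capture_height}, "
        f"framerate={framerate}/1, format=NV12 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width={output_width}, height={output_height}, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )


if __name__ == "__main__":
    # One pipeline per camera; only the sensor_id differs.
    for sensor_id in (0, 1):
        print(gstreamer_pipeline(sensor_id=sensor_id))
```

Each string would then be passed to `cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)`, which requires an OpenCV build with GStreamer support. Reaching more than 30 fps would presumably also mean choosing a lower capture resolution than the 3280x2464 default used here.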

I’ll be interested to see the capabilities of the stereo module in depth estimation. Since the module doesn’t include any add-ons that make stereo matching easier, e.g. an IR projector, the reliability of the depth map could be limited in some scenes, such as those with little texture. Furthermore, the wide-angle lenses could prove difficult to calibrate. My intention is to apply a pinhole camera model with basic distortion parameters. If the results are not adequate, I’ll have to look into fisheye calibration techniques. Lastly, I’m also interested in seeing the computational burden that stereo matching places on the Jetson Nano. My hunch is that the image resolution will have to be reduced quite a bit in order to achieve real-time performance while leaving some of the Nano’s computational resources available for other applications.
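To get a feel for what reducing the resolution costs in depth accuracy, the standard relation for a rectified stereo pair, Z = f * B / d, can be played with in a few lines. This is a rough sketch and not a measurement: the focal length (800 px at full resolution) and the baseline (0.06 m) are placeholder assumptions that would have to be replaced with calibrated values, and the function names are my own.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step(depth_m, focal_px, baseline_m, disparity_step_px=1.0):
    """Approximate depth change caused by one disparity step at a given depth.

    Differentiating Z = f * B / d gives |dZ| ~= Z^2 / (f * B) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


if __name__ == "__main__":
    FULL_RES_FOCAL_PX = 800.0  # assumed placeholder, not a calibrated value
    BASELINE_M = 0.06          # assumed placeholder, measure on the actual module

    # Downscaling the image by a factor scales the focal length in pixels
    # by the same factor, which coarsens the depth quantisation.
    for scale in (1.0, 0.5, 0.25):
        focal_px = FULL_RES_FOCAL_PX * scale
        step = depth_step(2.0, focal_px, BASELINE_M)
        print(f"scale {scale}: one-pixel disparity step at 2 m ~ {step:.3f} m")
```

The point of the sketch is the trend rather than the numbers: halving the resolution halves the focal length in pixels and so doubles the depth error caused by a one-pixel disparity error, which is the trade-off against the lower matching cost.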